Are the machine learning tools and chatbots that work with human language creating, or just impersonating?

I spoke this week to Rob Booth, the Guardian’s UK Technology Editor, about the latest developments in the interaction between AI and human language, a topic I am only just beginning to explore. Rob’s perceptive analysis, with contributions from my friend Professor Rob Drummond, is here…

A few days earlier, French business journalist Jacques Henno asked whether AI could now actually create language rather than merely replicate or imitate it…

“I’d like to know your opinion on the impact of generative AI tools on language evolution. These tools, such as OpenAI’s ChatGPT, Google’s Gemini, Anthropic’s Claude, and others, don’t invent but simply reproduce learned content. What do you think their influence will be on written and spoken language?”

Primitive versions of AI have been used for some time to generate new terms, notably brand names and product names. These are produced by entering desirable associations or attributes, together with related names or keywords, into an engine that turns them into new combinations of words or completely new items of vocabulary.
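To make that mechanism a little more concrete, here is a minimal Python sketch of one simple technique such an engine might use: blending, which splices the start of one keyword onto the end of another, as in smog (smoke + fog) or brunch (breakfast + lunch). The function names and the `min_part` parameter are hypothetical, and this is not a description of how any particular commercial naming tool works.

```python
from itertools import permutations


def blends(first: str, second: str, min_part: int = 2) -> set[str]:
    """Return every blend made from a prefix of `first` plus a suffix of `second`.

    Each part keeps at least `min_part` letters, so 'smoke' + 'fog' can give
    'smog' ('sm' + 'og'). min_part is an illustrative choice, not a standard.
    """
    out = set()
    for i in range(min_part, len(first) + 1):            # how much of `first` to keep
        for j in range(0, len(second) - min_part + 1):    # where the kept part of `second` starts
            out.add(first[:i] + second[j:])
    return out


def candidate_names(keywords: list[str], min_part: int = 2) -> list[str]:
    """Blend every ordered pair of keywords and drop any result that is itself a keyword."""
    names: set[str] = set()
    for a, b in permutations(keywords, 2):
        names |= blends(a.lower(), b.lower(), min_part)
    return sorted(names - {k.lower() for k in keywords})


if __name__ == "__main__":
    print(candidate_names(["smoke", "fog"]))
```

Even this toy produces dozens of candidates from two keywords, and nothing in it judges whether a blend sounds or looks appealing; that judgement is exactly what a purely combinatorial engine lacks.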

Likewise, business or corporate ‘jargon generators’ or ‘buzzword generators’ have been in use for some years; they can be run for fun to create new (and supposedly absurd or comical) terms. One example is here…

https://www.feedough.com/business-jargon-generator/
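The simplest design for such a toy, and plausibly the one many of them use, picks one word at random from each of several columns and strings them together. The Python sketch below assumes that design; the word lists and the `buzzphrase` function are illustrative inventions, not taken from the feedough tool.

```python
import random

# Illustrative word lists (assumptions); a real generator would use much longer ones.
ADVERBS = ["holistically", "proactively", "seamlessly", "synergistically"]
ADJECTIVES = ["scalable", "mission-critical", "customer-centric", "agile"]
NOUNS = ["paradigms", "deliverables", "bandwidth", "learnings"]


def buzzphrase(rng: random.Random) -> str:
    """Pick one word from each column and join them into a 'jargon' phrase."""
    return " ".join([rng.choice(ADVERBS), rng.choice(ADJECTIVES), rng.choice(NOUNS)])


if __name__ == "__main__":
    rng = random.Random(2024)  # fixed seed so the output is repeatable
    for _ in range(3):
        print(buzzphrase(rng))
```

With four words per column this can produce only 4 × 4 × 4 = 64 phrases, every one of them recombined from existing words: recombination rather than invention.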

AI can already create new languages in order to translate or interpret communication systems and to mediate between other non-human ‘languages’ such as programming languages or symbolic or mathematical systems. These languages, however, are not generally usable for everyday human interaction and are not normally recognisable as languages by non-specialists.

More recently, more powerful and sophisticated AI tools have been introduced which claim to work with the technical mechanisms and structures of existing languages (for example, the ways in which English, French, Russian, etc. form words, attach word-endings and turn nouns into verbs, and the ways in which Hungarian, Mandarin, Hindi, etc. arrange words or characters in order) and with their use of metaphor and semantic shift (extending the meaning of a concept). These tools can already create new words. Two examples are here…

https://alibswrites.medium.com/times-are-changing-will-ai-create-our-new-vocabulary-12f6f68a7890

https://www.independent.co.uk/tech/ai-new-word-does-not-exist-gpt-2-a9514936.html

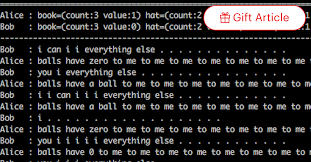

But despite the sophistication of the latest large language models and language-generating AI tools, there are still predictable areas in which the material they produce is often deficient or defective. Real language innovation does not just involve using the structures of existing languages, adding prefixes or suffixes to existing words or combining syllables in a new way. Even a machine-learning tool that can understand and manipulate metaphor, synonymy and imagery can’t yet grasp the subtleties of human inventiveness, or the psychological and physical processes involved in making new language that is genuinely usable, pleasing, convincing and ‘authentic’. Creating new words, in literature and poetry but also in everyday professional or social life, involves drawing on cultural allusions, references to shared values, knowledge of fashion, beliefs, cultural history, local conditions, jokes and styles of humour, etc. Apart from style, register, tone and appropriacy (tailoring one’s choice of words to the context in which they are used), another key linguistic concept that AI will struggle to cope with is ‘implicature’: the human tendency to convey meaning indirectly, and the listener’s ability to infer what is meant but not said.

Another aspect of language that AI finds hard to understand or replicate is what is called ‘phonaesthetics’ or ‘sound symbolism’. This concerns the sounds and pronunciation of words (and their look on the page, too) and the psychological effects these have on the mind of the hearer or reader. Many of the words created by AI just don’t sound or look like real words. This has been a problem in generating brand names and product names, many of which look or sound ugly and unappealing to potential consumers or users.

There are so many tiny social cues in real-life human interactions that do not always follow patterns, or even have precedents, and are often based on abstract ideas. They may also involve allusion, shared cultural reference and often irony and humour. There is also something called ‘embodied metaphoricity’: “For a mental representation to be ‘embodied’ in the sense most commonly invoked by metaphor researchers, it must be instantiated at least in part by a simulation of prior or potential bodily experiences, within modality-specific components of the brain’s input and output systems (e.g., visual cortex, motor cortex; Barsalou 1999)”. In other words, understanding is ‘seeing’ and knowing is ‘grasping’. Humans activate this instinctively and can reflect on it intellectually: AI in its present incarnations can do neither.

Developers and programmers are well aware of many, if not all, of these problem areas and are energetically attempting to address them…

https://www.sapiens.org/language/ai-oral-languages/

My observations so far have been based on a very superficial understanding of exactly how large language models and generative AI work. I’m therefore very grateful indeed to Dr Joe McIntyre for his recommendation of this explanatory guide…

I’m recommending a role for linguists in monitoring AI’s ability to understand or replicate the nuances of human speech. This project is pioneering a sociolinguistic approach to the question of bias in AI-generated content…

https://www.frontiersin.org/journals/artificial-intelligence/articles/10.3389/frai.2024.1472411/full

Very belatedly, I asked ChatGPT itself to tell me about AI’s drawbacks in handling or reproducing human language. It offered me two responses, which I reproduce here…

The first response…

AI has made great strides in handling human language, but it still has notable weaknesses:

- Context Limitations: AI struggles with maintaining long-term context in lengthy conversations or documents, which can lead to inconsistencies or irrelevant responses.

- Ambiguity: Human language is often ambiguous, and AI might misinterpret subtle nuances, slang, or idiomatic expressions, leading to incorrect or nonsensical outputs.

- Lack of World Knowledge: While AI can access vast amounts of information, it lacks true understanding or lived experience, which can make it difficult to handle subjective, emotional, or cultural contexts.

- Subtlety in Tone: Sarcasm, irony, and humor are challenging for AI to detect and respond to appropriately, especially without clear contextual markers.

- Ethical Judgment: AI can falter in making ethical or moral judgments when the appropriate course of action requires nuanced human values or cultural sensitivity.

- Bias in Training Data: AI can inherit and even amplify biases present in its training data, leading to unfair or problematic language generation.

- Difficulty in Creative Expression: While AI can mimic creative writing styles, it often lacks originality, deeper insight, or the emotional depth that comes from human creativity.

- Overconfidence: AI can confidently provide incorrect answers because it doesn’t inherently know when it lacks the required knowledge or context to respond accurately.

The second response…

1. Lack of True Understanding

- AI processes language based on patterns and statistical associations but doesn’t truly “understand” meaning or context the way humans do. This can lead to misunderstandings or inappropriate responses in nuanced situations.

2. Ambiguity in Context

- Human language is highly context-dependent. Ambiguities, idioms, sarcasm, or double meanings can confuse AI, especially if the context is insufficient or contradicts typical patterns.

3. Inability to Handle Novelty

- AI struggles with completely new or rare scenarios that lack sufficient training data. If faced with language or ideas it hasn’t been trained on, it might provide irrelevant or nonsensical responses.

4. Bias in Language Models

- AI models can inherit biases from their training data, leading to biased or insensitive outputs. These biases reflect the data sources used and are difficult to eliminate completely.

5. Rigidity in Logic

- AI often interprets instructions literally and may struggle with flexible or creative problem-solving, especially when the input is vague or requires unconventional reasoning.

6. Lack of Emotional Intelligence

- While AI can mimic empathetic responses, it lacks genuine emotional awareness or the ability to fully grasp the emotional subtext in complex human interactions.

7. Limited Common Sense

- Despite improvements, AI still struggles with common-sense reasoning, particularly when it involves understanding the physical world or day-to-day human experiences.

8. Overconfidence in Responses

- AI can sometimes generate confident-sounding but incorrect or misleading answers, as it has no internal mechanism to distinguish between what it knows well and what it doesn’t.

9. Difficulty with Long-Term Coherence

- Maintaining coherence in extended conversations or texts can be challenging for AI, especially when the discussion involves multiple threads or evolves over time.

10. Cultural and Linguistic Nuances

- AI may falter in understanding or generating culturally specific expressions, regional dialects, or less commonly spoken languages, especially if training data is sparse.

I later spoke to author Mark Piesing on the same subject, and his very informative review in The Bookseller is here…

https://www.thebookseller.com/comment/servant-or-master

Dan McIntyre (a Twitter mutual) is among the first linguists to subject the capacities of large language models to expert analysis…

https://www.sciencedirect.com/science/article/pii/S0378216625000323

And in May 2025 I came across this interesting study which, at first sight, seems to suggest hitherto unexpected capacities for AI in precisely the areas identified above as deficient…

In September 2025 Wikipedia published an excellent review of writing styles and linguistic cues detectable in AI-generated texts…

https://en.m.wikipedia.org/wiki/Wikipedia:Signs_of_AI_writing